Dataset size limits across the different Power BI capacities and scenarios can be hard to follow, so here is a quick overview.

Different types of capacities:

1. Shared Capacity

2. Premium Capacity

3. Azure Power BI Embedded Capacity

Power BI provides either Shared Capacity or Premium Capacity. By default, all Free and Pro accounts around the world run on a shared tenant. Premium Capacity provides dedicated capacity to the workspaces that have been assigned to it.

Then, what is Azure Power BI Embedded Capacity?

Well, in the context of dataset size, both Premium and Azure Power BI Embedded capacities provide the same limits.

Let’s discuss the limits provided by different capacities:

Free & Pro accounts

A Free or Pro account has a storage limit of 10 GB per workspace and 10 GB per user, and a size limit of 1 GB per dataset. Let’s look at this in a bit more detail.

A Free user cannot create App Workspaces, so the 10 GB per workspace and 10 GB per user limits amount to the same thing.

A Pro user can create App Workspaces, but can hold at most 10 GB of data across all of those workspaces, because the total is capped by the 10 GB per-user limit. No single workspace can hold more than 10 GB either.

Premium Capacity / Embedded Capacity

For a workspace backed by a Premium or Embedded capacity, the storage limit increases from 10 GB to 100 TB. This is the maximum data size allowed across all the workspaces backed by the same capacity. The size limit for a dataset in a workspace backed by the capacity increases from 1 GB to 10 GB.

Please note that the dataset size limit remains 1 GB if the workspace in which the dataset resides is not backed by the capacity. Also, only a Pro user can take advantage of Premium Capacity.
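As a rough sketch, the limits described so far can be written as a small Python check. The numbers are the ones quoted in this post and may be out of date. The names `Limits`, `SHARED`, `PREMIUM`, and `check_fit` are made up for illustration and are not part of any Power BI API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limits:
    """Size limits in GB, as quoted in this post (hypothetical helper type)."""
    max_dataset_gb: float
    max_total_storage_gb: float


# Free/Pro (shared capacity): 1 GB per dataset, 10 GB of storage in total.
SHARED = Limits(max_dataset_gb=1, max_total_storage_gb=10)
# Premium/Embedded: 10 GB per dataset, 100 TB across the capacity.
PREMIUM = Limits(max_dataset_gb=10, max_total_storage_gb=100 * 1024)


def check_fit(dataset_sizes_gb, limits):
    """Return a list of problems; an empty list means everything fits."""
    problems = []
    # Check each dataset against the per-dataset limit.
    for index, size in enumerate(dataset_sizes_gb):
        if size > limits.max_dataset_gb:
            problems.append(
                f"dataset {index} is {size} GB, "
                f"above the {limits.max_dataset_gb} GB per-dataset limit"
            )
    # Check the combined size against the total storage limit.
    total = sum(dataset_sizes_gb)
    if total > limits.max_total_storage_gb:
        problems.append(
            f"total of {total} GB is above the "
            f"{limits.max_total_storage_gb} GB storage limit"
        )
    return problems


if __name__ == "__main__":
    sizes = [0.5, 2.0]  # example dataset sizes in GB
    print(check_fit(sizes, SHARED))
    print(check_fit(sizes, PREMIUM))
```

With these example sizes, the 2 GB dataset is flagged under the shared limits and nothing is flagged under the Premium/Embedded limits.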

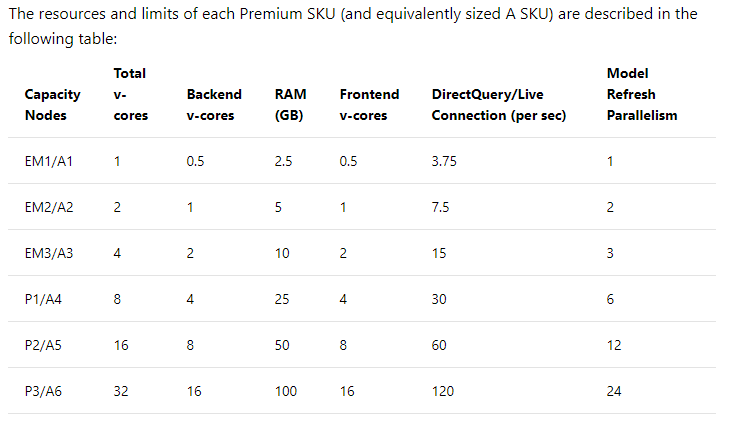

Premium Capacity comes in different SKUs, which offer different features but provide the same storage size. The image below summarizes them well.

So, how can we decide which capacity to buy?

How much capacity is needed depends on multiple parameters, such as the specific data models used, the number and complexity of queries, the hourly distribution of the usage of your application, the data refresh rates, and other usage patterns that are hard to predict.

Of these parameters, developers can only control the modeling, so that most of the data compresses well, and the complexity of the queries. The VertiPaq in-memory engine in Power BI achieves about 85-95% compression.

However, the compression rate isn’t always the same; it depends on the type of dataset, the data types of the columns, and so on. Hence, we should use modeling techniques that help Power BI maximize the compression rate.

Also, the important point here is that the dataset size limit applies to storage, not memory. Data is compressed when it is stored and expands when it is loaded into memory. On refresh, data is compressed and optimized and then stored to disk by the VertiPaq storage engine. When source data is loaded into memory, it is possible to see 10x compression, so it is reasonable to expect that 10 GB of source data can compress to about 1 GB. Storage size on disk can achieve a 20% reduction on top of this.
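The arithmetic above can be sketched in a few lines of Python. The 10x ratio and the extra 20% reduction on disk are the figures quoted in this post, not guarantees, and the function name `estimate_sizes` is made up for illustration.

```python
def estimate_sizes(source_gb, compression_ratio=10.0, disk_reduction=0.20):
    """Estimate the in-memory and on-disk size (in GB) of a model.

    compression_ratio and disk_reduction default to the figures quoted
    in this post; real datasets can compress better or worse.
    """
    in_memory_gb = source_gb / compression_ratio
    on_disk_gb = in_memory_gb * (1 - disk_reduction)
    return in_memory_gb, on_disk_gb


if __name__ == "__main__":
    in_memory, on_disk = estimate_sizes(10)  # 10 GB of source data
    print(f"in memory: about {in_memory:.1f} GB, on disk: about {on_disk:.1f} GB")
```

For 10 GB of source data, this gives roughly 1 GB in memory and roughly 0.8 GB on disk.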

A full refresh can use approximately double the amount of memory required by the model. This ensures the model can be queried even while it is being processed, because queries are sent to the existing model until the refresh has completed and the new model data is available. Hence, we also need to consider the size of the model and whether the capacity provides enough memory to refresh the dataset.
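As a quick sanity check, the "roughly double" rule can be turned into a small helper. This is only a sketch: the factor of 2 is the approximation mentioned above, and the function names and the memory figure in the example are hypothetical, so substitute the numbers for your own capacity.

```python
def refresh_peak_memory_gb(model_gb, refresh_factor=2.0):
    """Approximate peak memory (GB) during a full refresh.

    The old copy of the model keeps serving queries while the new copy
    is built, hence the default factor of about 2.
    """
    return model_gb * refresh_factor


def can_refresh(model_gb, available_memory_gb, refresh_factor=2.0):
    """Return True if the estimated refresh peak fits in available memory."""
    return refresh_peak_memory_gb(model_gb, refresh_factor) <= available_memory_gb


if __name__ == "__main__":
    available = 25  # hypothetical memory available to the capacity, in GB
    for model in (10, 15):
        print(model, "GB model refreshable:", can_refresh(model, available))
```

In this example, a 10 GB model needs about 20 GB during a refresh and fits in 25 GB, while a 15 GB model needs about 30 GB and does not.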

You can visit the following links to learn more about data compression:

https://docs.microsoft.com/en-us/power-bi/power-bi-reports-performance

https://www.microsoftpressstore.com/articles/article.aspx?p=2449192&seqNum=3

I hope this gives a fair idea of the size limits to consider while deciding on a capacity. Please feel free to add more in the comments section below in case I have missed anything worth considering.

© All Rights Reserved. Inkey IT Solutions Pvt. Ltd. 2024

Hello,

Sorry, but I am still trying to understand the difference between the Model size (Dataset) and Maximum storage. If I understood your text well, that means that you have (from a Pro perspective):

1. 1 GB per Workspace, maximum 10 Workspaces (with 1 GB each), 10 GB in total

2. Or, if the designer creates an App Workspace, he can have data up to a maximum of 10 GB together with the single Workspace (10 GB in total for the mix)

However, with Premium Capacity P1 we have only 25 GB of Model size, but how much for the Maximum Storage? Could you give me an example for that situation?

I am a little confused because I do not understand the differences between Model size and Maximum Storage well. Could you help me with this question?

Thanks

Hello Nebo,

I understand your confusion. Please note that this blog was written almost 2.5 years ago and things in the Power BI world have changed a lot since then.

I will share a couple of links with you which should clarify what holds true as of today:

https://community.powerbi.com/t5/Service/Capacity-nodes-for-Premium-Gen2-Preview-P1/m-p/2031900#M136899

https://docs.microsoft.com/en-us/power-bi/admin/service-premium-gen2-what-is#limitations-in-premium-gen2

I hope this helps!